Category: Others

-

Generate Images Using AI – Stable Diffusion

Using Stable Diffusion on an Apple M1 Mac

-

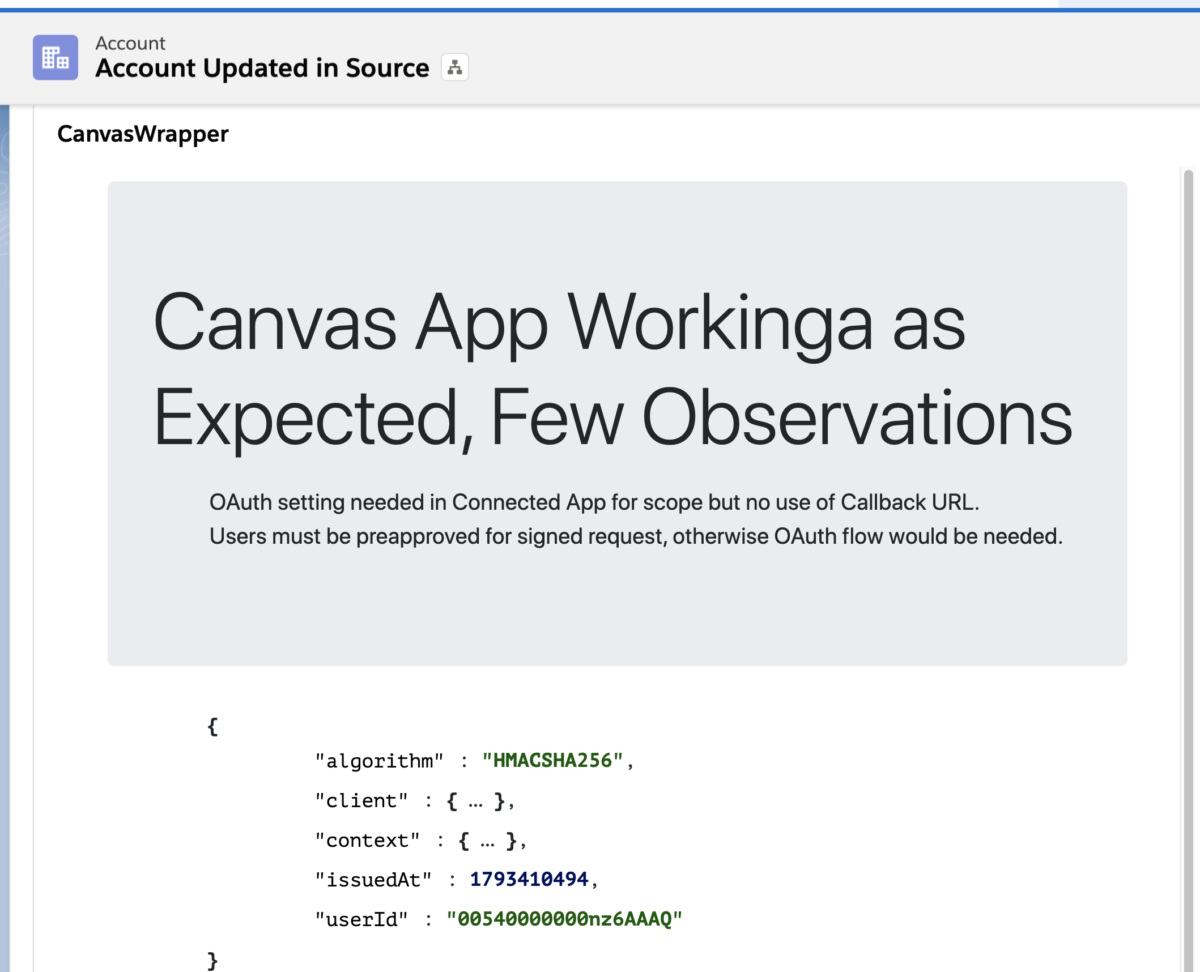

Salesforce Integration with Node.js-Based Applications Using Canvas

How to use Canvas signed request authentication with web-based applications such as Node.js, and how to use the Canvas lifecycle handler class

-

Shell Script – Read All File Names in a Git Pull Request

A shell script to read the names of all files that are part of a pull request and iterate through them
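The post itself uses a shell script; as a rough Python equivalent, here is a minimal sketch that asks Git for the changed files and returns them as a list you can loop over. It assumes the pull request branch is checked out locally and that the target branch is `origin/main` (a hypothetical default; adjust it to your repository) and has already been fetched. The helper names `parse_name_only` and `pr_changed_files` are illustrative, not from the post.

```python
import subprocess
from typing import List


def parse_name_only(output: str) -> List[str]:
    """Split `git diff --name-only` output into file paths, skipping blank lines."""
    return [line for line in output.splitlines() if line.strip()]


def pr_changed_files(base: str = "origin/main", head: str = "HEAD") -> List[str]:
    """Return the files changed on `head` since it diverged from `base`.

    Assumption: `base` (hypothetical default "origin/main") is the PR's target
    branch. The three-dot range diffs `head` against the merge base of the two
    refs, so only the pull request's own changes are listed.
    """
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...{head}"],
        capture_output=True,
        text=True,
        check=True,  # raise if git fails, e.g. an unknown ref
    )
    return parse_name_only(result.stdout)
```

Usage: `for path in pr_changed_files(): print(path)`. In plain shell, the same list comes from `git diff --name-only origin/main...HEAD` piped into a `while read -r` loop.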

-

Frequently Used Git Commands

The Git commands developers use most often

-

What Are Microservices?

I have read many posts and watched videos to understand microservices precisely, but I found Martin Fowler’s explanation of microservices the most helpful. This blog post is a recap and summary of what I understood about microservices architecture. Characteristics of microservices: build services in the form of components; components can be independently replaced and upgraded; components…

-

Definitions of Frequently Used Database Architecture Terms

Definitions of data warehouse, data lake, data mart, and operational data store

-

Housie / Tambola / Bingo Game Number Generator

A free online Housie / Tambola / Bingo game that is simple to use and play, with descriptions for each Bingo number
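The core of a number generator like this is drawing every number exactly once, in random order. A minimal sketch, assuming the usual 90-number Housie/Tambola range (pass `max_number=75` for 75-ball Bingo); the function name `housie_draw` and its parameters are illustrative, not the game's actual code:

```python
import random
from typing import Iterator, Optional


def housie_draw(max_number: int = 90, seed: Optional[int] = None) -> Iterator[int]:
    """Yield each number from 1 to max_number exactly once, in random order."""
    rng = random.Random(seed)  # a fixed seed makes a game reproducible
    numbers = list(range(1, max_number + 1))
    rng.shuffle(numbers)  # shuffle once up front, so no number can repeat
    yield from numbers


if __name__ == "__main__":
    caller = housie_draw()
    for _ in range(5):
        print(next(caller))  # call out the first five numbers
```

Shuffling the whole range once, instead of picking random numbers and skipping repeats, guarantees each draw takes constant time and the game ends after exactly `max_number` calls.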

-

How to Set Up a Git Server Using Bitvise SSH

A step-by-step guide to setting up a Git server using Bitvise SSH Server

-

How to Use a Symbolic Link to Move the Google Chrome AppData Folder to Another Location

Using a symbolic link to move large folders, such as the Google Chrome AppData folder, to a new location

-

Fix Git Errors: Permission denied, Cannot spawn, No supported authentication methods available

Recently, I came across a few Git errors that were very time-consuming to fix. Let’s discuss what those errors are and how to fix them. Error: Permission denied (publickey). fatal: Could not read from remote repository. This error occurred while trying to push changes to a remote repository using SSH keys.…